Summary: The tech industry often leans heavily on analytics, potentially sidelining the nuanced insights that qualitative methods provide. This article argues for integrating more qualitative methods into your research practice. It introduces the 200,000-users-per-condition benchmark and explains how, combined with qualitative testing, it helps make analytics more meaningful.

In my last four jobs, I've worked at tech companies that were either startups or makers of niche products for complex tasks; both kinds of companies served smaller user bases. Meanwhile, in today's fast-paced, shortcut-taking environment, many tech organizations lean heavily on self-reported satisfaction metrics and analytics. This reliance made me wonder: are we consistently interpreting our data correctly? With so many organizations taking their process cues from tech giants like Google, I'm concerned that they place undue trust in flawed analytics, leading to design decisions biased by misleading insights.

The Ambiguous Nature of Analytics in Tech

While data plays a crucial role in decision-making, its accuracy and integrity are often questionable. On the surface, the continuous inflow of data looks like a constantly updated source of insights that shapes design strategies and product direction. A closer look, however, reveals potential misinterpretations and missteps in data methodology.

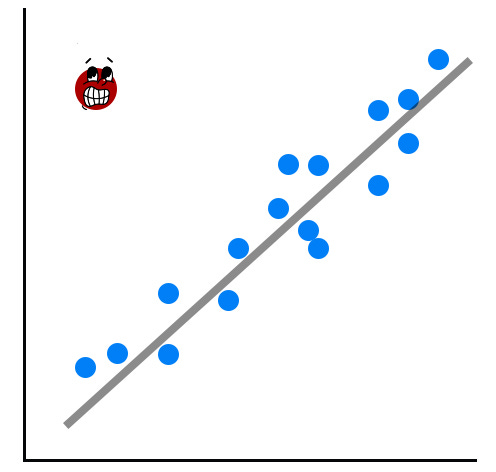

Jakob Nielsen recently published an article titled "Replication Crisis in Website Analytics: The Trouble with UX Stats." In it, he discusses ideas I've long felt to be true in my career but had never seen concrete data to support. Dr. Nielsen highlights how the broader UX field has misunderstood certain fundamental statistical concepts. For instance, we shouldn't automatically assume that user data follows a normal distribution (bell curve). He also examines the impact of outliers on our analytics and argues that many teams have likely been basing their design decisions on flawed analytics and misleading results.

NOTE: I recommend reading Jakob Nielsen's article for context before reading on. In it, you'll find plenty of statistics and referenced studies illustrating how tech organizations often misinterpret self-reported data and incorrectly analyze quantitative data.

Drawbacks of Over-reliance

The concerns above suggest that while data is often accurate, it can sometimes be misleading or even incorrect. A common challenge is telling genuine effects apart from false positives (apparent effects that aren't real) and false negatives (real effects that go undetected). Introducing AI into the mix adds another layer of complexity. Because AI can rapidly generate numerous variations, and analytics-driven testing is perceived as cost-effective, teams tend to test many ideas without adequate screening. Proper screening includes:

Ensuring each variation aligns with the company's strategic goals.

Grasping the potential impact on user experience.

Evaluating the feasibility of implementation.

NOTE: I'll dive deeper into how AI can be leveraged to help UX professionals screen properly in future articles. Stay tuned!

Preliminary qualitative methods such as heuristic reviews, user interviews, and moderated usability testing help filter ideas. Filtering helps ensure that only the most relevant and promising design variants reach the testing phase, reducing the risk of false positives and saving resources in the long run.

Testing many potentially ineffective design variants, combined with inevitable random error, can lead to a surge in false positives: at the common 5% significance level, every test of a variant with no real effect still has a 5% chance of flagging a difference, and those chances accumulate across tests. If a continuous stream of bad ideas is tested without a rigorous screening process, the likelihood of making misinformed decisions rises substantially. For more context on how I approach research errors, refer to the article titled "Type 1 vs. Type 2 Errors in UX Research."
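To make that arithmetic concrete, here is a minimal Python sketch. It assumes every test is independent, uses the same significance level (alpha), and compares variants that have no real effect; the function names and idea counts are hypothetical illustrations, not figures from Nielsen's article.

```python
# Sketch: how false positives accumulate when many variants are tested.
# Assumptions (hypothetical): independent tests, a shared alpha, and
# variants that truly perform the same as the control.


def family_wise_error_rate(num_tests: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across independent tests
    of variants that do not truly differ from the control."""
    return 1 - (1 - alpha) ** num_tests


def expected_false_positives(num_ineffective: int, alpha: float = 0.05) -> float:
    """Expected number of ineffective variants that still look like 'winners'."""
    return num_ineffective * alpha


if __name__ == "__main__":
    for k in (1, 5, 20, 50):
        print(f"{k:>3} tests -> P(at least one false positive) = "
              f"{family_wise_error_rate(k):.2f}")
    # Hypothetical comparison: 40 unscreened ideas (36 ineffective)
    # versus 10 screened ideas (4 ineffective).
    print(f"unscreened: {expected_false_positives(36):.1f} expected false positives")
    print(f"screened:   {expected_false_positives(4):.1f} expected false positives")
```

Under these assumptions, 20 tests of ineffective variants give roughly a 64% chance that at least one of them looks like a winner, which is why screening out weak ideas before testing matters.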

Finding the Middle Ground

Don't get me wrong: this doesn't mean analytics lack value. Used with understanding and caution, especially on platforms with vast user bases and significant financial stakes, analytics can provide in-depth insights.

In my experience, interpreting analytics is the only real way to measure minor differences between design options.

Still, it's crucial to recognize when to shift from gathering mainly qualitative data to relying more on quantitative data. For products with small user bases, or for teams aiming at broad usability improvements, qualitative usability testing can be more beneficial. Engaging with actual users to get real-world feedback offers a clearer picture of how design decisions should be shaped.

A Rule of Thumb for Using More Analytics

I recently came across the "200,000 users per condition" benchmark in Jakob Nielsen's article "Replication Crisis in Website Analytics: The Trouble with UX Stats." On further investigation, I realized that this number isn't arbitrary. It rests on the premise that as sample sizes grow, the margins of error in analytics shrink, yielding more reliable and statistically significant results. With around 200,000 users in each condition being tested, organizations are more likely to detect even minor performance differences between designs, variants, or features. Smaller samples may lack the statistical power to detect those differences, leading to incorrect conclusions.
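One way to see why sample size matters is a standard power calculation for a two-proportion z-test. The Python sketch below estimates the smallest absolute difference in conversion rate that can be detected reliably at a given number of users per condition. It is not necessarily how Nielsen arrived at 200,000; the 5% baseline rate, 5% significance level, and 80% power are assumptions chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(n_per_condition: int, baseline_rate: float,
                              alpha: float = 0.05, power: float = 0.80) -> float:
    """Approximate smallest absolute difference in conversion rate that a
    two-sided two-proportion z-test can reliably detect, assuming equal
    group sizes and the normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    standard_error = sqrt(2 * baseline_rate * (1 - baseline_rate) / n_per_condition)
    return (z_alpha + z_power) * standard_error


if __name__ == "__main__":
    baseline = 0.05  # hypothetical 5% conversion rate
    for n in (2_000, 20_000, 200_000):
        mde = minimum_detectable_effect(n, baseline)
        print(f"{n:>7} users per condition: ~{mde * 100:.2f} percentage points")
```

With these assumed inputs, the detectable difference is roughly 1.9 percentage points at 2,000 users per condition and roughly 0.19 points at 200,000. It shrinks with the square root of the sample size, so a 100-fold increase in users buys a 10-fold finer resolution.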

Larger samples also reduce the effect of unusual data points. Outliers can distort results in small samples, but their influence shrinks in bigger ones, which makes 200,000 a useful minimum for limiting these distortions. Underestimating how much outliers skew small samples is the mistake I see most UX researchers make when looking at user analytics.
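A quick illustration of why outliers matter less in bigger samples: the hypothetical Python sketch below adds a single extreme session duration to an otherwise uniform sample and measures how far the mean moves. The 60-second typical value and 6,000-second outlier are made-up numbers, and real data is never this tidy.

```python
from statistics import fmean


def mean_shift_from_outlier(sample_size: int, typical: float = 60.0,
                            outlier: float = 6_000.0) -> float:
    """How far one extreme value moves the mean of a sample whose other
    values all equal `typical` (a deliberately simplified, hypothetical sample)."""
    clean = [typical] * sample_size
    contaminated = clean + [outlier]
    return fmean(contaminated) - fmean(clean)


if __name__ == "__main__":
    for n in (20, 2_000, 200_000):
        shift = mean_shift_from_outlier(n)
        print(f"{n:>7} users: mean shifts by {shift:.3f} seconds")
```

With 20 users, the single outlier drags the mean up by about 283 seconds; with 200,000 users, it moves the mean by less than 0.03 seconds.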

This is especially important for early-stage validation research. If you are a UX professional at a startup or working on a product in its infancy, reaching 200,000 users per condition may be a distant milestone. In my career, I've found that research methods like moderated usability tests are much more effective at these early stages, offering actionable insights when quantitative data is sparse.

The 200,000 users per condition rule serves as a pragmatic checkpoint for organizations, marking the stage where analytics can be adopted as a co-pilot alongside qualitative testing methods. By respecting this benchmark and balancing quantitative and qualitative insights, organizations pave the way for informed, user-centric design decisions.

Practical Steps Forward

So what should UX teams working on products with fewer than 200,000 users per condition do? To counter the potential pitfalls of analytics, consider a dual approach:

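Evaluation & Validation: Implement replication experiments and A/A tests. Replicate past tests to see whether the findings hold up. In an A/A test, both groups see the identical experience, so any "significant" difference points to noise or a flawed setup. If the results raise concerns, assess your methodology. Refinement and retesting are key.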
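The logic of an A/A test can be sketched with a small simulation. In this hypothetical Python example, both groups share the same true conversion rate, so every "significant" result is a false positive; a sound setup should flag roughly alpha (here 5%) of such tests. The group sizes, conversion rate, and pooled z-test are illustrative assumptions, not a prescription for your analytics tool.

```python
import random
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(successes_a: int, successes_b: int, n: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test with equal group sizes."""
    pooled = (successes_a + successes_b) / (2 * n)
    standard_error = sqrt(pooled * (1 - pooled) * 2 / n)
    if standard_error == 0:
        return 1.0  # both groups at 0% or 100%: no evidence of a difference
    z = (successes_a - successes_b) / n / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(num_tests: int = 1_000, n_per_group: int = 1_000,
                      true_rate: float = 0.10, alpha: float = 0.05,
                      seed: int = 42) -> float:
    """Share of simulated A/A tests flagged as significant even though both
    groups have the same true conversion rate (hypothetical parameters)."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(num_tests):
        a = sum(rng.random() < true_rate for _ in range(n_per_group))
        b = sum(rng.random() < true_rate for _ in range(n_per_group))
        if two_proportion_p_value(a, b, n_per_group) < alpha:
            flagged += 1
    return flagged / num_tests


if __name__ == "__main__":
    rate = simulate_aa_tests()
    print(f"A/A tests flagged as significant: {rate:.1%}")
```

If your real A/A tests come out significant far more often than alpha, that suggests a problem in randomization, tracking, or analysis rather than a real difference between the groups.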

Screen & Streamline: Insert a robust screening process between ideation and testing. Before committing to any analytics test, run each idea through a heuristic review or a moderated usability test.

In essence, the aim is to make informed decisions based on reliable data and to execute fewer but more accurate analytics experiments.

Conclusion

Data and analytics, while powerful, have their limitations. As UX practitioners, we need to understand these limitations and be prepared to challenge assumptions that rest on a single set of analytics.

The true value of analytics lies not only in the data itself but also in how thoughtfully it is applied. The goal should be to use data to inform and guide, rather than dictate, our path forward.