Summary: This is a comprehensive article addressing most of my thoughts on the PURE method and its adoption within the UX field.

A subscriber recently asked me for more information on the advantages of transitioning from heuristic reviews to the PURE method, hoping to persuade their boss to experiment with the approach. In an earlier article titled "Are PURE Evaluations Better Than Heuristic Reviews," I advocated for the PURE (Practical Usability Rating by Experts) method, particularly for in-house UX teams of three or more members. I recognize that my earlier writing might not have provided a comprehensive set of arguments, so this article examines the core reasons I support the PURE method so strongly. I hope these insights prove valuable for anyone considering integrating PURE reviews into their team's everyday work. But before diving deep, let's first outline the PURE method to ensure we're all on the same page.

Understanding the PURE Method and How to Conduct a PURE Review

What is the PURE Method?

PURE is a predictive, quantitative usability inspection method used in UX research. Unlike traditional heuristic evaluations that provide a general overview of usability, the PURE method offers a task-based approach, evaluating and scoring a product or interface based on the expected difficulty level a user might face while completing specific tasks.

The essence of PURE lies in its ability to estimate task difficulty based on cognitive load and other usability friction points. Because the result is a number rather than a list of observations, it provides actionable insights and allows UX professionals to track usability improvements over time.

What is a PURE Review?

A PURE review is the process of conducting an evaluation using the PURE method. It involves:

Task Breakdown: The product or interface is examined based on specific user tasks. Each task is broken down into a series of steps a user would typically undertake.

Expert Rating: UX experts then rate each step on a user friction scale. This scale measures the expected difficulty a user might face at each step. For instance, a step might be rated as easy, moderate, or difficult based on cognitive load, potential for error, and other usability factors.

Quantitative Scoring: The ratings are then translated into numerical scores. These scores provide a quantitative measure of usability, allowing for easy comparison across different design iterations or even against competitor products.

Insight Generation: The scores and ratings lead to actionable insights. For example, steps with high-difficulty ratings might be flagged for redesign or further user testing.

How to Conduct a PURE Review

Conducting a PURE review involves a systematic approach. Here's a step-by-step guide:

Define User Tasks: Start by listing all the primary tasks a user would perform on the product or interface. For a shopping app, tasks might include "Search for a product," "Add to cart," and "Complete purchase."

Break Down Tasks: For each task, list the sequential steps a user would take. For "Search for a product," steps might include "Open app," "Navigate to search bar," "Enter product name," and "View search results."

Rate Each Step: UX experts individually rate each step based on expected user difficulty. This rating is typically done on a scale with descriptors like easy, moderate, or difficult.

Assign Numerical Scores: Convert the qualitative ratings into quantitative scores. For instance, "easy" might translate to a score of 1, while "difficult" might be a score of 3, 4, or 5, depending on the scale your team uses.

Aggregate Scores: For each task, aggregate the scores of its steps to get a total task difficulty score. This provides a holistic view of the task's usability. Learn more about how I approach scoring in my article 'Expanding the PURE Method to Evaluate Complex Designs.'

Analyze and Act: Review the scores to identify potential usability issues. Steps or tasks with high scores might need redesigning or further investigation. The goal is to lower these scores, thereby improving usability.

Iterate: After making design changes, conduct another PURE review to assess improvements. The quantitative nature of PURE allows for easy comparison between pre and post-design changes.
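The steps above can be sketched as a small Python data model. This is only an illustration under a few assumptions. Each step receives a single agreed-upon integer friction score, where 1 means easy and higher numbers mean harder. A task's difficulty is the sum of its step scores, as described in "Aggregate Scores." The task names, step names, scores, and the helper functions (`task_score`, `product_score`, `hardest_steps`) are hypothetical and do not come from any official PURE tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A user task broken into sequential steps, each with one friction score."""
    name: str
    # Maps step description -> agreed friction score (1 = easy; higher = harder).
    steps: dict[str, int] = field(default_factory=dict)


def task_score(task: Task) -> int:
    """Aggregate a task's difficulty by summing its step scores."""
    return sum(task.steps.values())


def product_score(tasks: list[Task]) -> int:
    """Sum task scores into one number for the whole set of reviewed tasks."""
    return sum(task_score(t) for t in tasks)


def hardest_steps(task: Task, threshold: int = 3) -> list[str]:
    """Return steps scored at or above `threshold` (hypothetical redesign cutoff)."""
    return [step for step, score in task.steps.items() if score >= threshold]


# Hypothetical ratings for the shopping-app example.
search = Task("Search for a product", {
    "Open app": 1,
    "Navigate to search bar": 2,
    "Enter product name": 1,
    "View search results": 3,
})
checkout = Task("Complete purchase", {
    "Open cart": 1,
    "Enter shipping details": 3,
    "Enter payment details": 4,
    "Confirm order": 1,
})

print(task_score(search))                 # 7
print(hardest_steps(checkout))            # ['Enter shipping details', 'Enter payment details']
print(product_score([search, checkout]))  # 16
```

When you re-run the same structure after a redesign, the totals are directly comparable with the earlier review. That comparability is what makes the "Iterate" step possible.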

Why Isn't PURE More Popular?

I've seen such enormous success with the PURE method over the last five years that I often ask myself why others aren't using it. One reason might be that heuristic evaluations have been a mainstay in the UX field for decades. Jakob Nielsen and Rolf Molich did the foundational work on them in the early 1990s. This long-standing presence has cemented heuristic evaluations as a go-to method for many UX professionals. In contrast, PURE, introduced by Rohrer et al. in 2016, is a newer method and may not be as well-known or understood in the UX community. For example, a quick search for heuristic evaluations/expert reviews

Another factor contributing to the slower adoption of PURE reviews is the complexity of the method, particularly the scoring system. While most other usability evaluation methods are straightforward and easy to execute, PURE requires a deeper understanding of its scoring system.

In my experience, a seasoned UX professional can grasp how to conduct a valid PURE review after observing just one. Even so, this initial learning curve might deter teams from adopting the method.

Additionally, organizations that are currently using PURE are not widely sharing their experiences or methodologies. This lack of public discourse contrasts with heuristic evaluations, which originated in academia and have been extensively documented in publicly available papers. PURE, on the other hand, was developed by active UX practitioners in the field, making publicly available information about the method scarcer and less thorough. This scarcity of information and the method's complexity could be significant barriers to its widespread adoption.

If my subscriber's curiosity is any indication of the future, perhaps the PURE method is on the rise. If so, I hope articles like this one can facilitate quick and easy adoption. I certainly wish I'd had a resource like this when I started experimenting with PURE reviews.

6 Reasons To Use PURE

Now that we understand what PURE is, how to conduct a PURE review, and why it's not more widely adopted, let's look at the six primary reasons I favor PURE reviews over heuristic reviews for most UX teams. But first, here's a list of disclaimers to further set the stage.

Disclaimers:

I still appreciate heuristic reviews, and there are situations where they might be more appropriate than a PURE review. Heuristic reviews are preferable when you:

Are the sole member of a UX team. A proper PURE review requires at least two additional UX professionals. (Though I've conducted them with fewer and still found success in real-world scenarios.)

Must provide detailed explanations and/or screenshots of the UX errors found. (While PURE reviews include this information, the insights are typically scattered throughout the evaluation. That's why I often create a report summarizing the primary insights from my PURE review. It's the best of both approaches!)

Haven't pinpointed specific user tasks for evaluation. If you lack detailed user task data or scenarios, a heuristic review might be more practical.

Face capacity constraints on your team and have very tight deadlines. A drawback of PURE reviews is the need for advance scheduling and planning to ensure the availability of UX reviewers. Although heuristic reviews might seem more flexible in theory, I've never actually run into this scheduling challenge in real-world projects.

Now that we've gotten those disclaimers out of the way, here are the six main reasons I love using PURE reviews so much.

1) Going Beyond Traditional: The Comprehensive Depth of PURE Reviews

Heuristic reviews offer usability insights based on Jakob Nielsen's 10 usability heuristics, but they often fall short of capturing the multifaceted nature of a user's experience in the real world. Enter PURE reviews, which not only encompass the foundational principles of heuristic evaluations but also extend far beyond, offering a more holistic, user-centered approach.

Incorporating Real-World User Flows

One of the standout features of PURE reviews is their emphasis on real-world user flows. Instead of a generic evaluation, PURE reviews are rooted in actual user tasks and behaviors. This ensures that the evaluation is not just based on theoretical principles but is grounded in the practical scenarios users might encounter. Such a task-based approach ensures that the insights derived are directly relevant to the user's journey, making them more actionable.

Persona-Driven Evaluations

PURE reviews take personalization a step further by framing the evaluation within the context of a real user persona.

This persona-driven approach simulates real-world user tasks, ensuring that the evaluation is not just about usability in a vacuum but about how a specific user type would interact with the product.

By placing the user at the center of the evaluation, PURE reviews capture the nuances and specificities that might be overlooked in a more generic heuristic review.

Accounting for Task Step Count

Another unique aspect of PURE reviews is their consideration of task step count. Every step a user must take receives its own friction score, so longer workflows accumulate higher task totals. As a result, PURE reviews offer some insight into time-on-task and a more realistic assessment of the user's journey. This granularity ensures that even minor usability friction points, which might be overlooked in a traditional heuristic review, are accounted for and addressed.
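A small worked example shows why step count matters when step scores are summed. The two flows below are hypothetical and describe the same goal. The short flow has one difficult step. The long flow has only easy steps but more of them. The `summarize` helper is illustrative and assumes that a task score is the sum of its step scores.

```python
def summarize(step_scores: list[int]) -> tuple[int, int, int]:
    """Return (step count, worst single step, summed task score)."""
    return len(step_scores), max(step_scores), sum(step_scores)


# Hypothetical flows for the same user goal.
short_flow = [1, 3, 1]             # three steps, one of them difficult
long_flow = [1, 1, 1, 1, 1, 1, 1]  # seven steps, each individually easy

print(summarize(short_flow))  # (3, 3, 5)
print(summarize(long_flow))   # (7, 1, 7)
```

Judged only by its worst step, the long flow looks flawless. Its summed score shows that seven easy steps still add up to more friction than the short flow's three.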

Cognitive and System Load Considerations

User experience is not just about following heuristics; it's also about the cognitive and system load that a product places on its users. PURE reviews recognize this and incorporate mechanisms, such as the scoring rubric, which help evaluators consider these variables. By evaluating how a product or interface might strain a user's cognitive resources or the system's capabilities, PURE reviews offer a more comprehensive usability assessment than heuristics alone.

2) Time Efficiency: The Unparalleled Advantage of PURE

In the fast-paced world of UX research, time is of the essence. Every moment we spend evaluating a product is a moment that could be used refining, redesigning, and enhancing the user experience. This is where the PURE method shines brightly, offering a compelling advantage in terms of time efficiency.

In a series of studies I spearheaded, the evidence was undeniable: my team at Siemens Software, well-versed in various UX methodologies, consistently completed a PURE review in one-eighth the time of a traditional heuristic review. (We reduced these types of reviews from weeks to days!) But let's dive deeper into what this truly signifies.

Depth Over Duration

While the time saved is substantial, it's essential to understand that this isn't a rushed process. On the contrary, PURE evaluations allow us to dig into the intricacies of user tasks and behaviors. Instead of merely identifying surface-level issues, we're able to dissect each task, step by step, pinpointing potential friction points and cognitive loads that might impede a user's journey.

Actionable Insights

The depth of a PURE evaluation translates into actionable insights. By understanding the nuances of each task, we can provide specific, targeted recommendations for improvement. These aren't vague suggestions based on general principles; they are precise, data-driven insights rooted in real user tasks and behaviors.

Continuous Improvement

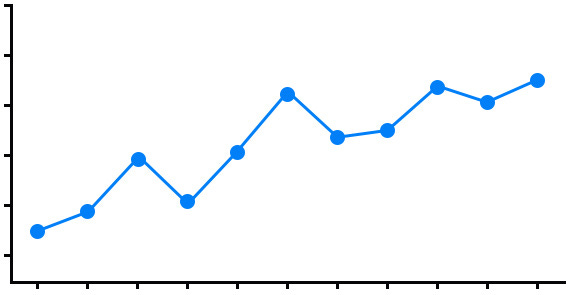

One of the standout features of PURE evaluations is the ability to track usability improvements over time. With the quantitative data it provides, we can monitor how design changes impact usability across different iterations. This iterative loop of design, assess, refine, and reassess ensures that we're always moving forward, always enhancing the user experience.

The Bigger Picture

Now, consider the cumulative effect of this time efficiency. Over the span of a project, the hours saved can be redirected towards other critical areas, be it further research, design refinements, or user testing. In essence, the PURE method doesn't just save time; it amplifies our capacity to deliver exceptional user experiences.

3) Comparative Analysis: The Dynamic Depth of PURE Evaluations

In UX research, understanding the present is only half the battle. To truly excel, we must also gauge how our designs evolve over time, ensuring that each iteration brings us closer to the pinnacle of user experience. This is where heuristic reviews often fall short, offering a mere snapshot in time. In contrast, PURE evaluations propel us into a dynamic, data-rich future.

Beyond the Static

Heuristic reviews, while undeniably valuable, tend to capture usability concerns at a specific point in time. They're akin to taking a photograph – it captures a moment, but it doesn't show the journey. PURE evaluations, with their numerical and comparable scores, are more like a time-lapse video, capturing the evolution of usability across various design stages.

The Power of Numbers

The quantitative nature of PURE evaluations cannot be overstated. By yielding numerical scores, they provide a tangible metric that can be tracked, analyzed, and compared. This isn't just about numbers; it's about understanding the story those numbers tell. Do these questions sound familiar?

Are our usability scores improving with each design iteration? Where are our users' friction points, and how have they shifted over time?
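Answering questions like these comes down to comparing the same tasks across reviews. The sketch below assumes each review is stored as a mapping from task name to step scores. The data, the `Review` alias, and the `compare_reviews` and `top_friction_step` helpers are hypothetical. A negative change means the task got easier, since lower scores indicate less friction.

```python
# Each review maps task name -> {step name: agreed friction score}.
Review = dict[str, dict[str, int]]


def compare_reviews(before: Review, after: Review) -> dict[str, int]:
    """Return the change in summed task score for tasks present in both reviews."""
    return {
        task: sum(after[task].values()) - sum(before[task].values())
        for task in before
        if task in after
    }


def top_friction_step(review: Review, task: str) -> str:
    """Return the highest-scoring step in a task (the first one wins on ties)."""
    steps = review[task]
    return max(steps, key=steps.get)


# Hypothetical reviews of two design iterations.
v1: Review = {
    "Add to cart": {"Open product page": 1, "Choose size": 3, "Tap add": 1},
    "Complete purchase": {"Enter shipping": 3, "Enter payment": 4, "Confirm": 1},
}
v2: Review = {
    "Add to cart": {"Open product page": 1, "Choose size": 1, "Tap add": 1},
    "Complete purchase": {"Enter shipping": 3, "Enter payment": 2, "Confirm": 1},
}

print(compare_reviews(v1, v2))                     # {'Add to cart': -2, 'Complete purchase': -2}
print(top_friction_step(v1, "Complete purchase"))  # Enter payment
print(top_friction_step(v2, "Complete purchase"))  # Enter shipping
```

Both tasks improved between iterations. The second question gets an answer too: the main friction point in checkout has moved from payment to shipping.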

Dynamic Progression

With PURE evaluations, the UX design process becomes a dynamic journey of continuous improvement. Instead of merely identifying issues in isolation, we can monitor how design changes impact usability scores across different iterations. This cyclical process of design, assess, refine, and reassess ensures that we're not just moving, but advancing with purpose.

Informed Decision Making

When you have comparative data at your fingertips, decision-making becomes more informed and strategic. Instead of relying on gut feelings or isolated observations, UX researchers and designers can make choices rooted in concrete data. This not only enhances the design process but also bolsters stakeholder confidence, as decisions are backed by tangible evidence.

The Bigger Picture

Imagine the potential when every design decision is informed by comparative data, when every change is measured against its predecessor, and when the entire UX team can visualize the trajectory of their efforts. This is the promise of PURE evaluations – a promise of clarity, progression, and excellence in the ever-evolving landscape of user experience.

4) Setting Benchmarks: The Evolutionary Insight of PURE Evaluations

To truly thrive in UX, we must not only identify where we stand but also chart our trajectory, setting clear benchmarks that guide our journey forward. This is where PURE evaluations shine, offering a depth of longitudinal analysis that traditional heuristic reviews can't hope to rival.

The Evolutionary Perspective

While heuristic reviews provide valuable insights into a product's usability at a specific moment, they aren't designed to track changes over extended periods. PURE evaluations, with their quantifiable scores, offer a chronological map of a product's usability journey. Each score, each data point, becomes a milestone, marking the product's evolution and growth.

Benchmarks as Beacons

In the vast sea of UX design, benchmarks act as guiding beacons, illuminating the path forward. By setting clear, data-driven benchmarks using PURE scores, we establish aspirational goals that drive continuous improvement. These aren't arbitrary targets but are rooted in real-world data, reflecting genuine user experiences and challenges.

Validating Design Decisions

Every design change, no matter how minor, is a decision that can impact user experience. With PURE evaluations, we can measure the efficacy of these decisions against established benchmarks. Do these questions sound familiar?

Did the latest design iteration even improve usability scores? How does it compare to previous versions?

Such comparative insights validate our choices, ensuring we always move in the right direction.
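One way to put a benchmark into practice is to set a target score for each task and check every new review against it. In this sketch, the targets, the scores, and the `check_benchmarks` helper are all hypothetical. The sketch treats "at or below target" as passing, which assumes, as elsewhere in this article, that lower PURE scores mean less friction.

```python
def check_benchmarks(scores: dict[str, int],
                     targets: dict[str, int]) -> dict[str, bool]:
    """Return True per task when its summed score is at or below its target."""
    return {
        task: scores[task] <= target
        for task, target in targets.items()
        if task in scores
    }


# Hypothetical benchmark targets and the latest review's task totals.
targets = {"Search for a product": 6, "Add to cart": 4, "Complete purchase": 7}
latest = {"Search for a product": 7, "Add to cart": 3, "Complete purchase": 6}

results = check_benchmarks(latest, targets)
print(results)
# {'Search for a product': False, 'Add to cart': True, 'Complete purchase': True}
print([task for task, met in results.items() if not met])  # ['Search for a product']
```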

Strategic Long-Term Planning

The longitudinal power of PURE evaluations extends beyond mere validation. By analyzing usability scores over time, we can forecast trends, anticipate challenges, and strategize for the future. This proactive approach, rooted in historical data, empowers UX teams to stay ahead of the curve, preemptively addressing potential issues before they escalate.

The Limitations of Heuristics

While heuristic reviews offer valuable insights, their static nature limits their utility in long-term planning. Without the ability to track and compare usability over time, heuristic reviews offer a fragmented view, lacking the continuity and depth that PURE evaluations provide.

5) Team Collaboration: Harnessing Diversity, Collective Wisdom, and Bias Reduction

The saying "two heads are better than one" rings especially true in UX research. With PURE evaluations, we elevate this concept by involving at least three seasoned UX professionals, each contributing their distinct expertise, insights, and perspectives. This collaborative approach is the essence of the PURE method, distinguishing it from traditional heuristic reviews.

Diverse Perspectives, Holistic Insights, and Bias Reduction

When several experts collaborate on a PURE evaluation, the outcome is a detailed collection of insights, capturing nuances that a single UX professional might have missed. Each expert, with their individual experiences and specializations, examines the product from a unique vantage point. This diversity helps ensure a comprehensive evaluation and keeps any one person's biases from skewing the review's findings.

Mitigating Designer Bias: The PURE Advantage

A fundamental challenge in UX evaluations is designer bias. It's a well-acknowledged principle that designers should ideally not review their own creations, as proximity to a project can cloud objectivity. The PURE method inherently addresses this by involving at least three UX professionals in the review. Even if circumstances don't allow for all three reviewers to be distinct from the designer, the method ensures that at least two unbiased perspectives are included, offering a more balanced evaluation.

Checking Biases and Leveraging Strengths

Every evaluator, regardless of their experience, can occasionally be influenced by personal biases. Within the collaborative framework of PURE evaluations, team members serve as counterbalances for one another. They challenge assumptions, scrutinize conclusions, and ensure the assessment remains grounded. Additionally, each expert taps into their specific strengths, whether in cognitive psychology, interaction design, or user behavior, ensuring a thorough and encompassing evaluation.

Accelerated Reviews Through Shared Understanding

As teams consistently engage in PURE evaluations, they cultivate a shared understanding, almost like a common dialect. This mutual grasp expedites the review process. Over successive evaluations, team members begin to predict each other's viewpoints, proactively address potential concerns, and refine discussions. It's a synergy that transcends mere speed, stemming from genuine collaboration.

Building a Cohesive UX Team

Beyond the immediate advantages of a meticulous evaluation, the collaborative essence of PURE reviews nurtures team unity. Through discussions, debates, and consensus-building, team members solidify their professional relationships. This alignment, nurtured over numerous reviews, not only amplifies the caliber of evaluations but also promotes a culture of ongoing learning and mutual respect.

I've witnessed the transformative power of PURE on UX teams, from tech giants to mid-tier software firms and agile startups.

6) Statistical Reliability: The Precision of Collective Expertise in PURE Evaluations

For researchers, precision and dependability of evaluations are not just desirable but essential. These evaluations shape design choices, user interactions, and the eventual success of a product. PURE evaluations stand out in this context, offering unparalleled statistical reliability, amplified by the collective expertise of several UX professionals.

The Precision of Collective Scoring

While traditional heuristic evaluations, typically conducted by a lone expert, can be influenced by individual biases and subjective views, PURE evaluations involve multiple experts scoring independently. This approach makes the final score a balanced representation that is far less vulnerable to any one individual's biases. It's a practical application of the "wisdom of the crowd" principle, where collective judgment often trumps individual opinions.
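Here is a sketch of how independent ratings might be combined. It assumes each expert scores every step alone before any discussion. Two choices in the sketch are assumptions made for illustration, not rules of the PURE method: the median serves as a stand-in for the final score, and any step where the ratings differ by more than one point is flagged for discussion. A team may instead reconcile every step to a consensus score through discussion. The step names and ratings are hypothetical.

```python
from statistics import median


def combine_ratings(ratings: dict[str, list[int]],
                    tolerance: int = 1) -> tuple[dict[str, float], list[str]]:
    """Combine independent expert ratings per step.

    Returns (combined scores, steps needing discussion). The median limits the
    pull of any one outlier rater; steps whose ratings spread by more than
    `tolerance` points are flagged for the team to talk through.
    """
    combined = {step: median(scores) for step, scores in ratings.items()}
    disputed = [step for step, scores in ratings.items()
                if max(scores) - min(scores) > tolerance]
    return combined, disputed


# Hypothetical independent ratings from three reviewers.
ratings = {
    "Navigate to search bar": [2, 2, 1],
    "View search results": [3, 3, 3],
    "Apply filters": [1, 4, 3],
}

combined, disputed = combine_ratings(ratings)
print(combined)  # {'Navigate to search bar': 2, 'View search results': 3, 'Apply filters': 3}
print(disputed)  # ['Apply filters']
```

With an odd number of reviewers, the median is always one of the submitted scores. With an even number, it can land between two scores (for example, 2.5).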

Consistent High Inter-rater Reliability

The reliability of PURE evaluations is further evidenced by the consistently high inter-rater reliability scores they achieve. For instance, a PURE evaluation at Bowery Valuation yielded an inter-rater reliability score of 0.98 across fifteen real-world user tasks, indicating near-perfect agreement among the raters. In my experience, such impressive scores are not outliers but are regularly observed, highlighting the method's consistency and trustworthiness.

Data-Driven Validation of PURE's Reliability

PURE evaluations not only provide directionally accurate results but also show commendable validity and reliability. A comparative study between PURE results and metrics from a usability-benchmarking study on a similar product found significant correlations with established ease-of-use survey measures. Specifically, PURE correlated at 0.5 (p < 0.05) with the Single Ease Question (SEQ) and at 0.4 (p < 0.01) with the System Usability Scale (SUS). These statistics support PURE's validity against standard quantitative metrics at statistically significant levels.

Furthermore, inter-rater reliability calculations for PURE have ranged from 0.5 to 0.9. Notably, scores typically exceed 0.8 after expert raters undergo training on the method. A 2016 article titled "Practical Usability Rating by Experts (PURE): A Pragmatic Approach for Scoring Product Usability" dives deeper into this, presenting the first documented case study of the PURE method in action.
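The figures above don't say which reliability statistic was computed, so treat the sketch below as one simple option, not the method's official formula. It calculates the average pairwise Pearson correlation between raters' task scores and reports it next to the share of tasks where all raters gave exactly the same score. Seeing the two side by side makes one point clear: a high correlation coefficient does not by itself mean that raters gave identical numbers. The rater scores and helper names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mean_pairwise_correlation(raters: dict[str, list[float]]) -> float:
    """Average Pearson r over every pair of raters (same task order assumed)."""
    pairs = list(combinations(raters.values(), 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def exact_agreement(raters: dict[str, list[float]]) -> float:
    """Share of tasks on which every rater gave the identical score."""
    matches = [len(set(scores)) == 1 for scores in zip(*raters.values())]
    return sum(matches) / len(matches)


# Hypothetical summed task scores from three raters over five tasks.
raters = {
    "A": [5, 9, 12, 7, 15],
    "B": [6, 9, 13, 7, 16],
    "C": [5, 9, 12, 8, 15],
}

print(round(mean_pairwise_correlation(raters), 2))  # 0.99
print(exact_agreement(raters))                      # 0.2
```

In this example, the raters' totals correlate at about 0.99, yet all three gave identical scores on only one task in five. A high correlation shows that raters rank and scale tasks consistently; it doesn't show that their numbers match exactly. If you compare your results with published figures, check which statistic the original study used (for example, an intraclass correlation).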

Consistency Over Time

The world of UX is dynamic, with products evolving and user behaviors shifting. Yet, despite these changes, PURE evaluations have consistently delivered high reliability scores over the years. This consistency is a testament to the method's robustness and ability to adapt to changing UX landscapes.

Informed Decisions with Confidence

With such high reliability, UX teams can make design decisions with greater confidence. They can be assured that the insights and recommendations derived from PURE evaluations do not rest on a single person's opinion but are grounded in rigorous, collective analysis.

Conclusion

The PURE method stands out for its efficiency, reliability, and emphasis on collaboration. It transcends the limitations of traditional heuristic reviews by offering time-efficient evaluations, enabling comparative analysis across design iterations, and setting robust benchmarks for usability progression. The collaborative nature of PURE evaluations fosters team alignment, leveraging the strengths of multiple experts to ensure comprehensive assessments. This collective approach not only checks individual biases but also accelerates the review process over time due to the shared understanding within the team. Furthermore, the involvement of multiple UX professionals in scoring bolsters the statistical reliability of PURE evaluations, as evidenced by consistently high inter-rater reliability scores. The PURE method's task-based, quantitative approach provides a clear, actionable roadmap for UX improvements. As we navigate the complexities of user experience design, embracing the PURE method is not just a recommendation—it's a strategic imperative for any UX researcher aiming for excellence.

Teaser

This post expands on my thoughts on the PURE method, and I'm looking forward to sharing more in future articles. You can learn about the custom PURE scoring system used by over 10 UX teams in my previous article, 'Expanding the PURE Method to Evaluate Complex Designs.' Additionally, I am working on a thorough article that will give you step-by-step instructions on how to use the custom PURE templates I've developed for conducting PURE reviews efficiently at scale.

References

Rohrer, C., Wendt, J., Sauro, J., Boyle, F., and Cole, S. (2016). Practical Usability Rating by Experts (PURE): A Pragmatic Approach for Scoring Product Usability.

Rohrer, C. (2017). Quantifying and Comparing Ease of Use Without Breaking the Bank.

Sauro, J. (2017). Practical Usability Rating by Experts (PURE).

Card, S. K., Moran, T. P., and Newell, A. (1983). The Psychology of Human-Computer Interaction.

Molich, R., and Nielsen, J. (1990). Improving a human-computer dialogue, Communications of the ACM 33, 3 (March), 338-348.

Nielsen, J. (1994). Heuristic evaluation. In Nielsen, J., and Mack, R.L. (Eds.), Usability Inspection Methods, John Wiley & Sons, New York, NY.